Tracking Down Human Actions with AI

What is a person doing right now and what conclusions can be drawn from it? This recognition and understanding of human actions is the main function of a new software library based on machine learning that researchers at the Fraunhofer Institute for Manufacturing Engineering and Automation IPA have developed. Its main advantage is its easy adaptability to new application scenarios.

Recognizing and interpreting human actions is a complex but promising task for computer vision. Human activities are diverse and often consist of several smaller sub-activities, called actions in this context. Being able to automatically recognize and evaluate them and, if necessary, derive reactions from them opens a wide range of possible applications. In manual production, a worker’s steps could be recognized. If the process contains an error, the worker could receive messages in real time and correct it immediately, saving time. In retail, such recognition functions help to analyze customer behavior so that, for example, products can be placed and offered in the best possible way. Finally, if a service robot that supports people in their daily lives could recognize their current actions and intentions, its responses could be tailored directly and automatically. In particular, it could proactively offer its support without the user having to voice explicit instructions.

Universal Software Library

To implement these tasks, researchers at Fraunhofer IPA developed a new software library. Its advantage compared to existing systems: It can be easily adapted to new applications without the need for extensive training data. It is not only capable of recognizing individual actions of humans, but also of interpreting them as part of a larger activity and thereby detecting whether the human is performing them correctly. The software is the result of the EU research project Socrates. The aim of the project was to give machines an understanding of human actions.

The software consists of three subsystems: first, a basic module for action recognition; second, a module for teaching and recognizing application-specific actions; and third, a module for recognizing activities as sequences of actions and interpreting them correctly. The modules can be used individually or in combination. Adapting them to a specific application requires only small adjustments. Creating and processing the large amounts of data that are usually required is still a bottleneck of machine learning (ML) methods. Therefore, the developers paid particular attention to the challenge of having sufficient training data available for the ML methods used.

Self-Learning Even Without Extensive Training Data

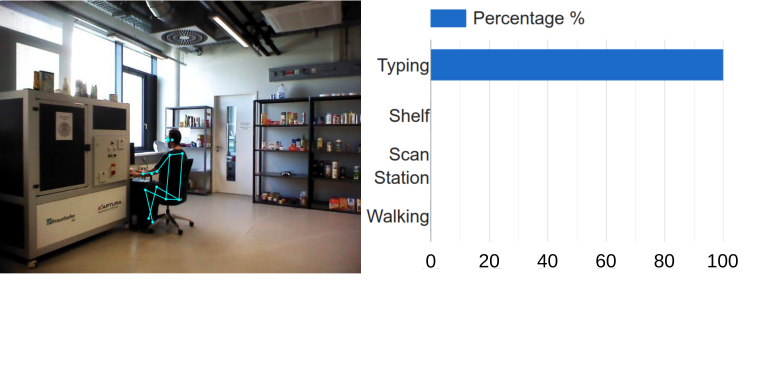

The basic module for action recognition uses a convolutional neural network to recognize a person’s actions. For this purpose, a camera is installed in the operational environment. When a person enters the field of view, the person’s joints are identified in the images and a virtual skeleton is created. The skeleton is detected with the software PoseNet (Papandreou et al. 2018). The detected skeletal movements are observed across several images, and the data is bundled and passed to the neural network. The network then classifies this data as actions such as “sitting down” or “typing on the keyboard”. To train the network, publicly available datasets can be used that contain sufficient data (several thousand videos of the actions to be recognized). In addition to the software mentioned above, the module mainly uses PyTorch and the Robot Operating System (ROS).
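The bundling step, in which skeletons from several consecutive images are grouped before being handed to the network, might look roughly like the following. This is only a sketch and not the Fraunhofer IPA implementation: the 17-joint skeleton, the window length, the stride, and the names `flatten_skeleton` and `make_windows` are all assumptions made for illustration.

```python
from typing import List, Tuple

Skeleton = List[Tuple[float, float]]  # one (x, y) image coordinate per joint

NUM_JOINTS = 17  # assumption: a 17-keypoint skeleton per person


def flatten_skeleton(skeleton: Skeleton) -> List[float]:
    """Turn [(x0, y0), (x1, y1), ...] into [x0, y0, x1, y1, ...]."""
    return [coord for joint in skeleton for coord in joint]


def make_windows(frames: List[Skeleton], window: int, stride: int) -> List[List[List[float]]]:
    """Bundle per-frame skeletons into fixed-length, overlapping clips.

    Each clip has shape (window, 2 * NUM_JOINTS) and would be the input
    that a classifier sees for one prediction.
    """
    windows = []
    for start in range(0, len(frames) - window + 1, stride):
        clip = frames[start:start + window]
        windows.append([flatten_skeleton(skeleton) for skeleton in clip])
    return windows


# Ten synthetic frames stand in for the output of a pose estimator.
frames = [[(float(t), float(j)) for j in range(NUM_JOINTS)] for t in range(10)]
windows = make_windows(frames, window=4, stride=2)
print(len(windows), len(windows[0]), len(windows[0][0]))
```

In a real pipeline, each clip would be converted to a tensor and passed to the convolutional network, which outputs one action label per clip.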

Customizable Module for Teach-In

However, in most cases publicly available data sets do not contain the exact actions required for a specific application. Creating custom data sets to train the basic module described above for each application would involve considerable effort and is therefore impractical in most cases. To make the methods available for practical use, Fraunhofer IPA has developed a new, customizable module, based on the existing basic module, for teaching and subsequently recognizing application-specific actions.

Using a clustering method (DBSCAN [Ester et al. 1996]), the module maps the outputs of the first module to new actions. To learn a new action, it only has to be performed a few times in front of the camera. This self-learning behavior makes the software easy to train compared to other data- and computation-intensive deep learning methods, and thus well suited to industrial environments. On the technical side, the module mainly uses Scikit and, again, ROS.
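To illustrate how density-based clustering can turn a handful of demonstrations into new action classes, here is a minimal, dependency-free sketch of DBSCAN. It is not the module's actual code: the two-dimensional feature vectors, the values of `eps` and `min_samples`, and the cluster names are invented for the example, and a production system would more likely rely on an existing library implementation.

```python
from math import dist

NOISE = -1


def region_query(points, i, eps):
    """Indices of all points within eps of points[i], including i itself."""
    return [j for j, q in enumerate(points) if dist(points[i], q) <= eps]


def dbscan(points, eps, min_samples):
    """Minimal DBSCAN: one cluster label per point, -1 for noise."""
    labels = [None] * len(points)
    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        neighbors = region_query(points, i, eps)
        if len(neighbors) < min_samples:
            labels[i] = NOISE  # may later become a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in neighbors if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # border point: joins, but is not expanded
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_neighbors = region_query(points, j, eps)
            if len(j_neighbors) >= min_samples:  # core point: keep growing
                queue.extend(j_neighbors)
    return labels


# Hypothetical feature vectors from the basic module: two actions shown
# three times each, plus one stray movement.
features = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1),
            (5.0, 5.0), (5.1, 5.0), (5.0, 5.1),
            (10.0, 0.0)]
labels = dbscan(features, eps=0.5, min_samples=3)

# The user then names the clusters that were found (names are examples).
names = {0: "grab component", 1: "screw", NOISE: "unknown"}
named = [names[label] for label in labels]
print(named)
```

Because DBSCAN does not need the number of clusters in advance and marks isolated points as noise, a few repetitions per action can be enough to form a cluster, while stray movements are left unassigned.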

The third software module is used to recognize and analyze activities, i.e., sequences of actions. For this purpose, the module uses the outputs of either the basic module presented above or the second, customizable module for action recognition. An assembly task consists, for example, of the actions “grab component”, “screw”, and “place on table”. After these actions have been taught to the system and can thus be recognized, the activity analysis checks whether the actions have been performed in the correct sequence. Timely feedback to workers as to whether they are performing an activity correctly can increase productivity, as errors are detected early.
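The sequence check for the assembly example can be sketched as follows. This is a simplified illustration under the assumption that the action recognition delivers one label per time window; the function names and the strict in-order comparison are choices made for the example, not a description of the actual module.

```python
from itertools import groupby
from typing import List, Sequence, Tuple


def collapse_repeats(labels: Sequence[str]) -> List[str]:
    """Merge consecutive identical labels into a single action."""
    return [label for label, _ in groupby(labels)]


def check_activity(observed: Sequence[str], expected: Sequence[str]) -> Tuple[bool, str]:
    """Compare the observed action sequence with the expected one."""
    actions = collapse_repeats(observed)
    for step, (got, want) in enumerate(zip(actions, expected), start=1):
        if got != want:
            return False, f"step {step}: expected '{want}', got '{got}'"
    if len(actions) < len(expected):
        return False, f"step {len(actions) + 1} missing: '{expected[len(actions)]}'"
    if len(actions) > len(expected):
        return False, f"unexpected extra action: '{actions[len(expected)]}'"
    return True, "all actions performed in the correct order"


EXPECTED = ["grab component", "screw", "place on table"]

# Per-window labels as an action recognizer might emit them.
good = ["grab component"] * 3 + ["screw"] * 2 + ["place on table"]
bad = ["grab component", "grab component", "place on table", "screw"]

print(check_activity(good, EXPECTED))
print(check_activity(bad, EXPECTED))
```

Running the check after every newly recognized action, rather than only at the end, is one way to give the worker feedback as soon as a step is out of order.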

Ready for Use in Three Steps

The three modules are used for a task analysis as follows: First, the relevant actions for the individual application are defined. Then, they are taught to the software by performing them a few times in front of the camera. After that, the user can give the clusters that were automatically identified by the system appropriate names such as “grab component”. On this basis, the software can independently classify the performed activities. The software thus makes it possible to analyze and understand human actions without having to train the overall system for new actions or activities at great expense.

Since Fraunhofer IPA also has extensive knowledge in image processing beyond the presented software, the application can be extended with functions such as environment or object recognition to achieve an even better understanding of humans and their interaction with their working environments.

Author

Cagatay Odabasi, Project Manager in the group “Household and assistive robots”