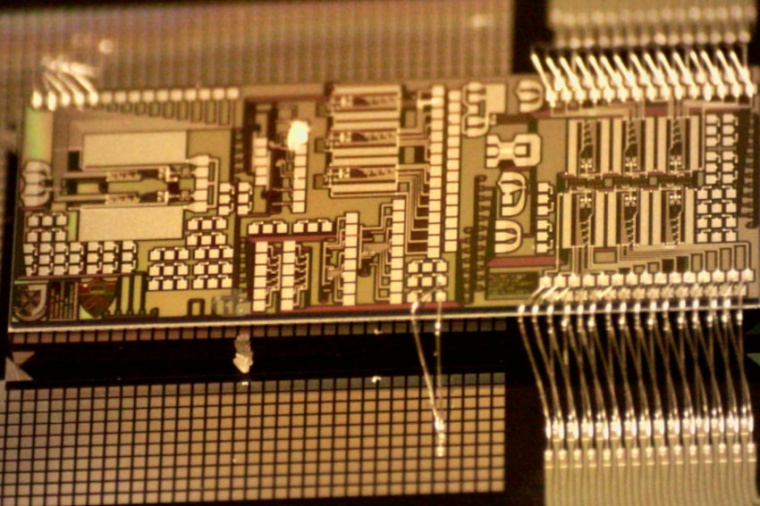

Teaching photonic chips to learn

Novel hardware will speed up the training of machine learning systems.

Before a machine can perform intelligence tasks such as recognizing the details of an image, it must be trained. Training of modern-day artificial intelligence (AI) systems like Tesla’s Autopilot costs several million dollars in electric power consumption and requires supercomputer-like infrastructure. This surging AI appetite leaves an ever-widening gap between what computer hardware can deliver and the demand for AI. Photonic integrated circuits – optical chips – have emerged as a possible solution to deliver higher computing performance, as measured by the number of operations performed per second per watt used. However, though they have demonstrated improved core operations in machine intelligence used for data classification, photonic chips have yet to improve the actual front-end learning and machine training process.

Machine learning is a two-step procedure. First, data is used to train the system, and then other data is used to test the performance of the AI system. Thus far, photonic chips have only demonstrated an ability to classify and infer information from data. Now, a team of researchers from the George Washington University, Queen’s University, the University of British Columbia and Princeton University has made it possible to speed up the training step itself. After one training step, the team observed an error and reconfigured the hardware for a second training cycle, followed by additional training cycles until sufficient AI performance was reached – e.g. the system is able to correctly label objects appearing in a movie.
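The train-then-test cycle described here can be illustrated with a minimal software sketch. This is not the researchers' photonic method: it assumes a simple perceptron on synthetic 2-D points, and every name in it (`train`, `make_data`, `target_accuracy` and so on) is hypothetical. It only shows the general pattern of measuring an error, adjusting the system, and repeating until performance is sufficient, followed by a test on separate data.

```python
import random

def predict(weights, bias, x):
    """Classify a 2-D point as 1 or 0 with a linear threshold."""
    return 1 if weights[0] * x[0] + weights[1] * x[1] + bias > 0 else 0

def accuracy(weights, bias, data):
    """Fraction of (point, label) pairs classified correctly."""
    return sum(predict(weights, bias, x) == y for x, y in data) / len(data)

def train(train_data, target_accuracy=0.95, max_cycles=100, lr=0.1):
    """Repeat training cycles until the target accuracy is reached."""
    weights, bias = [0.0, 0.0], 0.0
    for cycle in range(1, max_cycles + 1):
        for x, y in train_data:
            # Observe the error, then adjust ("reconfigure") the parameters.
            error = y - predict(weights, bias, x)
            weights[0] += lr * error * x[0]
            weights[1] += lr * error * x[1]
            bias += lr * error
        if accuracy(weights, bias, train_data) >= target_accuracy:
            break  # sufficient performance reached
    return weights, bias, cycle

def make_data(n, rng):
    """Synthetic labelled points: label is 1 when x0 + x1 > 0 (assumed toy task)."""
    data = []
    while len(data) < n:
        x = (rng.uniform(-1, 1), rng.uniform(-1, 1))
        if abs(x[0] + x[1]) < 0.1:
            continue  # skip points too close to the boundary
        data.append((x, 1 if x[0] + x[1] > 0 else 0))
    return data

rng = random.Random(0)            # fixed seed for repeatability
train_data = make_data(200, rng)  # step 1: data used for training
test_data = make_data(100, rng)   # step 2: other data used for testing

weights, bias, cycles = train(train_data)
test_acc = accuracy(weights, bias, test_data)
print(f"training cycles: {cycles}, test accuracy: {test_acc:.2f}")
```

In this sketch the parameter update happens in software; in the work described, the corresponding step is the reconfiguration of the hardware between training cycles.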

This added AI capability is part of a larger effort around photonic tensor cores and other electronic-photonic application-specific integrated circuits (ASICs) that leverage photonic chip manufacturing for machine learning and AI applications. “This novel hardware will speed up the training of machine learning systems and harness the best of what both photonics and electronic chips have to offer. It is a major leap forward for AI hardware acceleration. These are the kinds of advancements we need in the semiconductor industry as underscored by the recently passed CHIPS Act”, Volker Sorger from the George Washington University said. “The training of AI systems costs a significant amount of energy and carbon footprint. For example, a single AI transformer takes about five times as much CO2 in electricity as a gasoline car spends in its lifetime. Our training on photonic chips will help to reduce this overhead”, Bhavin Shastri from Queen’s University added. (Source: GWU)
