Making Science Fiction Fact

Computers may have gained many orders of magnitude in processing power since they were first invented, but the way we interact with them has changed very little over the same period. Vision systems that interpret images from twin cameras are beginning to change this, enabling futuristic interfaces that go beyond those dreamt of in science fiction.

Ask someone on the street how to interact with a computer and they will most likely name three methods: keyboard, mouse and touch screen. In other words, very little has changed in nearly four decades. The mouse and keyboard have been the de facto controllers since the Xerox Alto was developed in 1972, and even the touch screen, which is now becoming ubiquitous in smartphones and tablet PCs, dates back to the 1970s, with Elo TouchSystems announcing one of the first touch screens in 1974.

Touch control systems proved popular in heavy industry and in areas such as room automation, where a keyboard and mouse do not let users interact with on-screen content satisfactorily, intuitively, rapidly or accurately.

Now, however, stereoscopic vision systems are enabling new ways of communicating with the digital world. These systems are more intuitive to use and, in many ways, reminiscent of science fiction films such as Minority Report, the 2002 Tom Cruise movie.

Beyond Sci Fi

Minority Report envisioned a computer interface capable of tracking hand and finger movements. In the film, gestures ranging from pointing to grabbing, and from opening a hand to waving, were interpreted to control and manipulate data on the screen. However, the accuracy, precision and increasing bandwidth of today's cutting-edge vision cameras enable more than just finger control.

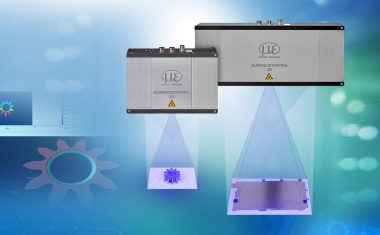

Of particular note is an interface created in Switzerland through a collaboration between Atracsys and Sony Image Sensing Solutions: the Interactive Communication Unit, or ICU for short. At the heart of ICU are two high-specification IEEE 1394b cameras that precisely track a user's three-dimensional movements, and even their emotions. The cameras are linked to a computer which, in turn, instantly updates the content displayed on screen.

ICU currently uses two Sony XCD-V60 cameras linked to a standard PC. It is understood that the cameras will be upgraded to the XCD-SX90, which features a 1/3-type progressive-scan interline-transfer (PS IT) sensor.

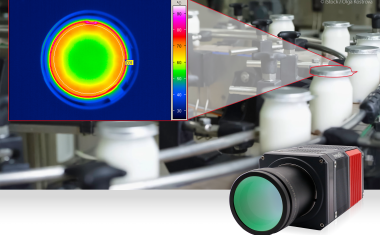

The subtle characteristics that ICU can detect make the vision system ideal for applications such as marketing. A user's age and sex can be determined, and moods including happiness, anger, sadness and surprise can be interpreted. This is done by tracking facial features such as the upturned corners of the mouth that accompany a smile, or the widening of the eyes that accompanies surprise. Using two cameras allows these features to be tracked in three dimensions, enabling a computer interface that immerses the user more deeply in an application, be it for industrial, marketing or gaming purposes.
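To make the three-dimensional tracking more concrete, here is a minimal Python sketch of how a rectified stereo pair can locate a facial landmark in space and measure how far the mouth corners are raised. It is not ICU's algorithm, which the article does not describe: the `StereoRig` calibration values, the helper names and the assumption that each landmark has already been found in both images are all hypothetical. Depth follows the standard rectified-stereo relation Z = f·B/d, where f is the focal length in pixels, B the baseline between the cameras and d the horizontal disparity.

```python
from dataclasses import dataclass


@dataclass
class StereoRig:
    """Hypothetical calibration of a rectified stereo pair (illustrative values only)."""
    focal_px: float    # focal length in pixels, shared by both cameras after rectification
    baseline_m: float  # distance between the two camera centres, in metres
    cx: float          # principal point x, in pixels
    cy: float          # principal point y, in pixels


def triangulate(rig, left_px, right_px):
    """Return (X, Y, Z) in metres, in the left camera's frame, for a point seen in both images."""
    xl, yl = left_px
    xr, _ = right_px  # after rectification the point lies on the same row in both images
    disparity = xl - xr
    if disparity <= 0:
        raise ValueError("point must have positive disparity")
    z = rig.focal_px * rig.baseline_m / disparity  # Z = f * B / d
    x = (xl - rig.cx) * z / rig.focal_px
    y = (yl - rig.cy) * z / rig.focal_px
    return (x, y, z)


def mouth_upturn(rig, left_corner, right_corner, centre):
    """Height of the mouth corners above the mouth centre, in metres (hypothetical heuristic).

    Each argument is a (left_image_px, right_image_px) pair. Camera Y points down, as image
    rows do, so a positive result means the corners sit higher than the centre.
    Assumes an upright head; head-pose compensation is deliberately left out.
    """
    lc = triangulate(rig, *left_corner)
    rc = triangulate(rig, *right_corner)
    c = triangulate(rig, *centre)
    corner_y = (lc[1] + rc[1]) / 2
    return c[1] - corner_y
```

With an 800-pixel focal length and a 12 cm baseline, a landmark with 48 pixels of disparity lies 2 m from the cameras. A production system would also express the landmarks in a face-aligned frame so that tilting the head is not mistaken for a smile.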

Tracking Options

Getting rid of the mouse: Using a stereoscopic vision system, it is possible to determine precisely where a person is pointing or, indeed, where their gaze is focused. This allows the mouse pointer to be moved with a finger or with the eyes, while a secondary movement, such as a smile or nod, simulates a 'click'.
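Determining where someone is pointing can be sketched as a ray-plane intersection: extend a line from the user's eye through their fingertip and find where it meets the screen. The minimal Python sketch below assumes the stereo system already delivers both points in metres in a screen-aligned frame; the frame convention, the function name and the screen dimensions are illustrative assumptions rather than details of ICU.

```python
def pointing_target(eye, fingertip, screen_w_m, screen_h_m, screen_w_px, screen_h_px):
    """Pixel the user is pointing at, or None if the ray misses the screen.

    Hypothetical frame: the screen lies in the plane z = 0 with its top-left corner at the
    origin, x to the right, y downwards and z pointing out towards the user, all in metres.
    """
    ex, ey, ez = eye
    fx, fy, fz = fingertip
    dz = ez - fz
    if dz <= 0:
        return None  # fingertip is not closer to the screen than the eye: ray points away
    t = ez / dz  # ray parameter at which z reaches 0 (the screen plane)
    hit_x = ex + t * (fx - ex)
    hit_y = ey + t * (fy - ey)
    if not (0 <= hit_x <= screen_w_m and 0 <= hit_y <= screen_h_m):
        return None  # intersection falls outside the physical screen
    # Convert metres on the screen surface to pixel coordinates
    return (round(hit_x / screen_w_m * screen_w_px), round(hit_y / screen_h_m * screen_h_px))
```

For an eye 2 m from a 1.0 m × 0.6 m display and a fingertip held straight out in line with the screen centre, the function returns the centre pixel of a 1920 × 1080 image, (960, 540).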

In marketing, where the technology is already being used, this gives access to web-based content that delivers additional information on a product or service. For example, a person staring at a watch or gadget in a shop window could call up reviews or the official website for further relevant content.
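One common way to turn "staring at a product" into a selection is a dwell timer: the selection fires once the gaze has rested on the same target for a set time. The Python class below is a hypothetical sketch of that idea, not a description of how ICU works; the 1.5 s threshold and the product identifiers are illustrative, and the gaze tracker feeding it is assumed.

```python
class DwellSelector:
    """Fires a selection when gaze stays on the same target for `dwell_s` seconds.

    Hypothetical helper: `target` is whatever the gaze tracker reports the user is looking
    at (for example a product id), or None when no product is being looked at.
    """

    def __init__(self, dwell_s=1.5):
        self.dwell_s = dwell_s
        self._current = None  # target currently being looked at
        self._since = None    # time at which the gaze arrived on that target
        self._fired = False   # True once this dwell has already produced a selection

    def update(self, target, t):
        """Feed one gaze sample taken at time t (seconds); return the target once when selected."""
        if target != self._current:
            # Gaze moved to a new target (or away): restart the dwell timer
            self._current, self._since, self._fired = target, t, False
            return None
        if target is None or self._fired:
            return None
        if t - self._since >= self.dwell_s:
            self._fired = True  # select only once per continuous dwell
            return target
        return None
```

Firing only once per continuous dwell avoids reopening the same page repeatedly while the shopper keeps looking; glancing away and back restarts the timer.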

Much like a touch screen's multi-touch capability, vision systems can also interpret multiple gestures. One example is the circular menu, in which the cursor is steered by moving the head left and right or up and down.
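A circular menu of this kind reduces to an angle calculation: the direction in which the head is deflected from its neutral pose picks a slice of the circle. The Python sketch below is an illustrative assumption, not ICU's implementation; yaw and pitch are assumed to come from the head tracker in degrees, and the dead zone stops small, unintended movements from selecting anything.

```python
import math


def menu_item(yaw_deg, pitch_deg, n_items, dead_zone_deg=5.0):
    """Index of the circular-menu item the head is pointing towards, or None near the centre.

    Hypothetical conventions: yaw is positive when the head turns right and pitch positive
    when it tilts up, both measured from the user's neutral pose. Item 0 is centred on the
    right and items are numbered anticlockwise, each occupying an equal slice of the circle.
    """
    if math.hypot(yaw_deg, pitch_deg) < dead_zone_deg:
        return None  # head close to neutral: no selection
    angle = math.degrees(math.atan2(pitch_deg, yaw_deg)) % 360.0
    slice_deg = 360.0 / n_items
    # Shift by half a slice so each item is centred on its nominal direction
    return int((angle + slice_deg / 2) // slice_deg) % n_items
```

With four items, turning right, tilting up, turning left and tilting down select items 0, 1, 2 and 3 respectively.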

Positioning correctly: Whether at a shop window display or in an industrial application, people are rarely in the optimal position to view and interact with content. Stereo vision enables the computer to calculate precisely where the user is. Taking this into account, the displayed content can be adjusted with 3D rendering effects to best suit the user's viewpoint.
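One established way to adapt 3D content to the viewer's position is head-coupled, or off-axis, perspective: the viewing frustum is recomputed each frame so that the screen behaves like a window seen from where the user actually stands. The Python sketch below assumes a flat screen centred at the origin in the z = 0 plane, with y up and z pointing towards the user, all in metres; the function names are hypothetical, and the matrix follows the conventional OpenGL `glFrustum` layout. Whether ICU uses this particular technique is not stated in the article.

```python
def off_axis_frustum(eye, screen_w, screen_h, near):
    """Near-plane bounds (left, right, bottom, top) for a viewer at `eye`.

    Hypothetical frame: screen centred at the origin in the z = 0 plane, x right, y up,
    z towards the viewer, all in metres. `eye` = (ex, ey, ez) with ez > 0.
    """
    ex, ey, ez = eye
    if ez <= 0:
        raise ValueError("viewer must be in front of the screen")
    scale = near / ez  # similar triangles: project the screen edges onto the near plane
    left = (-screen_w / 2 - ex) * scale
    right = (screen_w / 2 - ex) * scale
    bottom = (-screen_h / 2 - ey) * scale
    top = (screen_h / 2 - ey) * scale
    return left, right, bottom, top


def frustum_matrix(left, right, bottom, top, near, far):
    """Row-major 4x4 perspective matrix in the classic OpenGL glFrustum layout."""
    return [
        [2 * near / (right - left), 0.0, (right + left) / (right - left), 0.0],
        [0.0, 2 * near / (top - bottom), (top + bottom) / (top - bottom), 0.0],
        [0.0, 0.0, -(far + near) / (far - near), -2 * far * near / (far - near)],
        [0.0, 0.0, -1.0, 0.0],
    ]
```

The scene must also be translated by the negative of the eye position before this projection is applied. When the user stands directly in front of the screen the frustum is symmetric; stepping sideways skews it, so the content appears to stay fixed behind the glass.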

Mood control: By detecting the smallest movements of a person's face, ICU can be taught to interpret that person's response. Since marketing is one of the key sectors Atracsys predicts will adopt the technology, this sensitivity to motion will prove vital: content can be tailored not only to a demographic group but also precisely to an individual's mood.

Gaming applications: By moving their whole body, users can interact simply and naturally with gaming content. Simple algorithms running on the host computer and tailored to each application would allow avatars to mimic real-life activity. For example, a classic such as Space Invaders, the arcade game of the 1970s, could be played by moving left and right to steer the player's laser cannon. This interactive capability also has important implications beyond games and marketing. In industrial applications, for example, users would be able to control robot arms remotely using simple hand or arm movements.
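For the Space Invaders example, the "simple algorithm" could be little more than a clamped linear mapping from the player's sideways position to the cannon's x coordinate, with some smoothing to damp tracking jitter. This Python sketch is hypothetical: the play-area width, the 224-pixel game width and the smoothing factor are illustrative values, and the body position is assumed to come from the stereo tracker.

```python
def make_cannon_controller(play_width_m, game_width_px, smoothing=0.3):
    """Return a function mapping the player's sideways position to the cannon's x coordinate.

    Hypothetical mapping: body_x_m is the player's torso position across the play area in
    metres, 0 at its left edge. Positions outside the area are clamped, and exponential
    smoothing (0 = cannon frozen, 1 = no smoothing) damps tracking jitter.
    """
    x = game_width_px / 2  # start with the cannon in the middle of the screen

    def update(body_x_m):
        nonlocal x
        frac = min(max(body_x_m / play_width_m, 0.0), 1.0)  # clamp to the play area
        target = frac * game_width_px
        x += smoothing * (target - x)  # move part of the way towards the target each frame
        return x

    return update
```

Usage: create `ctrl = make_cannon_controller(2.0, 224)` once, then call `ctrl(body_x)` every frame with the latest tracked position. A lower smoothing factor gives steadier but laggier control, which is the main trade-off to tune for each game.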

Future Applications

Making computer interfaces more intuitive and engaging is essential. Beyond commercial applications, interfaces based on vision systems could also play an important role in medicine. One potential application is in the operating theatre, where surgeons could access electronic data without touching a mouse or keyboard, and so maintain sterile conditions.

Stereoscopic vision systems have enabled the biggest change in computer interactivity since the mouse and touch screen emerged in the 1970s. The technology behind ICU can be adapted to virtually any purpose and to the needs of each application. The main limitations are the capacity to imagine compelling content and the physical space available, which complicates features such as multi-user experiences.

Minority Report brought the concept to the world's attention, and it has taken less than seven years since then to develop the technology and make science fiction fact.