Fast Mover: Freeze motion imaging and event recording

From Eadweard Muybridge's seminal late nineteenth-century analysis of the gait of a galloping horse, high-speed imaging has a rich history of revealing new information about motions, events, and processes. In today's industrial and scientific environments, high-speed imaging is used predominantly for scientific analysis and for test and measurement applications, of which vehicle collision studies are probably the most widely recognized. Other significant applications include particle image velocimetry (PIV), in which images of fluid flows are captured and particle velocities extracted as a tool for aerodynamic engineering, as well as human motion analysis, failure mode dynamics and analysis, impact testing, and ballistics research. In each case, the ultimate value to the user lies in the insight gained from viewing events that happen on a timescale far faster than humans can perceive.

Factors to consider for high-speed imaging

High-speed imaging relies on the ability to capture an optical image of a scene in a short period of time. In general, the shorter the capture period, the better fast-moving events can be 'frozen', and it is this ability to capture brief moments in time that provides the diagnostic information used in applications. A typical approach is simply to decrease the exposure time of the camera. The image sensors used in typical machine vision cameras have minimum exposure times in the range 5-15 microseconds, whilst dedicated high-speed imaging systems can operate with exposure times below 200 ns. If the object being imaged is moving fast, an exposure time that is not short enough will result in a blurred image. For example, imaging a vehicle travelling at 360 km/h (100 m/s) with a typical 10 microsecond exposure time results in a motion blur of 1 mm. This may be acceptable for large vehicles several metres long, but a high-velocity bullet 20 mm long and travelling at 1200 m/s requires an exposure time of approximately 83 ns to keep motion blur below 0.1 mm.
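Both figures follow from treating blur as the distance the object travels during the exposure, blur = speed × exposure time. The minimal Python sketch below reproduces that arithmetic; it deliberately ignores optical magnification and pixel size, which a real setup would also need to account for.

```python
def motion_blur_m(speed_m_per_s: float, exposure_s: float) -> float:
    """Distance travelled by the object during one exposure, i.e. the blur length in metres."""
    return speed_m_per_s * exposure_s


def max_exposure_s(speed_m_per_s: float, max_blur_m: float) -> float:
    """Longest exposure (in seconds) that keeps the blur at or below max_blur_m."""
    return max_blur_m / speed_m_per_s


# Vehicle at 360 km/h (100 m/s) imaged with a 10 microsecond exposure
vehicle_speed = 360 / 3.6
print(f"vehicle blur: {motion_blur_m(vehicle_speed, 10e-6) * 1e3:.2f} mm")  # 1.00 mm

# 1200 m/s bullet with the blur limited to 0.1 mm
print(f"bullet exposure: {max_exposure_s(1200.0, 0.1e-3) * 1e9:.0f} ns")  # 83 ns
```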

Motion blur is not only a matter of image appearance. In metrological applications such as inkjet nozzle analysis, the quality of the object edge is important: if the required precision is in the micrometre range, the exposure ('freezing') time must be below 200 ns. Such short acquisition times are beyond the reach of ordinary cameras.

To achieve a well-exposed image, the image sensor must capture sufficient light from the scene, and for very short exposure times this becomes increasingly difficult. Large pixels with high optical sensitivity can be designed, but they add cost and size to the overall camera system. Faster optics can help, up to certain physical limits, but at the expense of increased system cost, size, and weight. High numerical aperture optics also bring a further trade-off in depth of field: low f-number lenses capture more light, but the depth of field is reduced.
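The light versus depth-of-field trade-off can be made concrete with two textbook approximations, used here as assumptions rather than figures for any particular camera: image-plane exposure scales as t/N² (exposure time over f-number squared), and for a subject well inside the hyperfocal distance the depth of field is roughly 2Nc·u²/f², where c is the acceptable circle of confusion, u the subject distance and f the focal length. The lens, distance and circle-of-confusion values below are hypothetical.

```python
import math


def f_number_for_same_exposure(n_ref: float, t_ref_s: float, t_new_s: float) -> float:
    """f-number that keeps image-plane exposure (proportional to t / N^2) unchanged."""
    return n_ref * math.sqrt(t_new_s / t_ref_s)


def approx_depth_of_field_m(n: float, coc_m: float, subject_m: float, focal_m: float) -> float:
    """Thin-lens approximation DoF ~ 2 N c u^2 / f^2, valid well inside the hyperfocal distance."""
    return 2.0 * n * coc_m * subject_m**2 / focal_m**2


# Hypothetical starting point: f/16 at 10 us, exposure shortened to 100 ns (100x less light)
n_new = f_number_for_same_exposure(16.0, 10e-6, 100e-9)
print(f"required f-number: f/{n_new:.1f}")  # f/1.6

# Hypothetical 50 mm lens, subject at 1 m, 10 um circle of confusion
for n in (16.0, n_new):
    dof = approx_depth_of_field_m(n, 10e-6, 1.0, 50e-3)
    print(f"f/{n:.1f}: depth of field ~ {dof * 1e3:.0f} mm")
```

Under these assumptions, a 100-fold cut in exposure time calls for a 10-fold smaller f-number (f/16 to f/1.6), and the depth of field shrinks by the same factor of 10, from roughly 128 mm to about 13 mm.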

If an event is repeatable, the signal from repeated, synchronized captures can be accumulated to reach the required exposure level. However, simply summing images from a conventional high-speed camera may not give the desired results; the camera system must meet additional requirements to ensure that the signal is accumulated without also accumulating noise. Some systems that meet these requirements can perform the accumulation internally, reducing both the measurement time and the data bandwidth needed to output images from the system.
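Why naive summing also accumulates noise can be illustrated with a simple noise model, assumed here for illustration: photon shot noise (variance equal to the signal in electrons) plus a fixed read noise at every readout, with dark current ignored. Summing N separately read-out frames adds the read-noise variance N times, whereas integrating N exposures before a single readout adds it only once. The signal and read-noise figures are hypothetical.

```python
import math


def snr_offchip_sum(signal_e: float, read_noise_e: float, n: int) -> float:
    """SNR when n separately read-out frames are summed: read-noise variance is added n times."""
    total = n * signal_e
    return total / math.sqrt(total + n * read_noise_e**2)


def snr_onchip_sum(signal_e: float, read_noise_e: float, n: int) -> float:
    """SNR when n exposures are integrated before a single readout."""
    total = n * signal_e
    return total / math.sqrt(total + read_noise_e**2)


# Hypothetical very dim event: 5 e- of signal per exposure, 10 e- rms read noise
for n in (1, 10, 100):
    print(f"n={n:3d}  off-chip SNR={snr_offchip_sum(5, 10, n):6.2f}  "
          f"on-chip SNR={snr_onchip_sum(5, 10, n):6.2f}")
```

In this model the two approaches agree for a single frame, but after 100 repetitions the on-chip sum reaches an SNR roughly four times higher. A real sensor would add further terms (dark current, fixed-pattern noise) to the model.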

More usually, events are not repeatable and must be captured immediately, in a single exposure. In this case, high-brightness external illumination sources can be used to provide the required light levels within short periods of time. An additional concern here is balancing the required brightness with eye safety for users. Pulsed illumination, synchronized with the image sensor exposure, can reduce the time-averaged optical intensity to safe levels, avoiding the need for continuous high-power illumination. High-brightness pulsed illumination is well known from conventional sources such as xenon flash lamps. However, these have pulse durations, latencies, and jitter on the scale of microseconds, which may not be fast enough for many applications.
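The benefit of pulsing can be quantified through the duty cycle: for a train of rectangular pulses, the time-averaged power is the peak power multiplied by the pulse width and the repetition rate. The sketch below uses hypothetical source figures. Actual eye-safety classification depends on more than average power (per-pulse energy and wavelength also matter), so this only illustrates the averaging effect.

```python
def duty_cycle(pulse_width_s: float, rep_rate_hz: float) -> float:
    """Fraction of time the source is on, for rectangular pulses."""
    return pulse_width_s * rep_rate_hz


def average_power_w(peak_power_w: float, pulse_width_s: float, rep_rate_hz: float) -> float:
    """Time-averaged optical power of a rectangular pulse train."""
    return peak_power_w * duty_cycle(pulse_width_s, rep_rate_hz)


# Hypothetical source: 50 W peak, 10 ns pulses, one pulse per frame at 1000 fps
avg = average_power_w(50.0, 10e-9, 1000.0)
print(f"duty cycle: {duty_cycle(10e-9, 1000.0):.0e}, average power: {avg * 1e3:.2f} mW")
```

With these numbers the source is on for only 1 part in 100,000 of the time, so a 50 W peak corresponds to an average of 0.5 mW.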

A related consideration for high-speed imaging is the required frame rate of the system, that is, the rate at which sequential full images of the scene can be captured. The interval between sequential frames cannot be shorter than the exposure time, and is generally substantially longer, because time is also needed to read the image out electronically. The frame rate can often be increased by setting the camera to capture a smaller image: for example, reducing a 1280 x 1024 image to 128 x 128 pixels could raise the frame rate more than 20-fold, from 450 fps to 10,300 fps. An additional consideration is whether every image must be transmitted to a host PC at the full frame rate, or whether a sequence of images can be stored on the camera itself for subsequent transmission. Full-frame-rate data transfer generally implies a higher system cost, as high-bandwidth transmission protocols are needed together with additional components such as framegrabbers. Conversely, on-board storage allows a more cost-effective solution, but the number of frames that can be stored before the memory is full is limited.
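The bandwidth and storage trade-off comes down to simple arithmetic: the data rate is width × height × bytes per pixel × frame rate, and dividing the on-board memory by the frame size gives the number of frames that fit. The sketch below applies this to the two formats quoted above, assuming 8-bit pixels and a hypothetical 2 GiB of on-board memory; neither figure comes from a specific camera.

```python
def data_rate_bytes_per_s(width: int, height: int, bytes_per_pixel: int, fps: float) -> float:
    """Sustained data rate needed to stream every frame to a host PC."""
    return width * height * bytes_per_pixel * fps


def frames_in_memory(memory_bytes: int, width: int, height: int, bytes_per_pixel: int) -> int:
    """Number of whole frames that fit in on-board memory."""
    return memory_bytes // (width * height * bytes_per_pixel)


MEMORY_BYTES = 2 * 1024**3  # hypothetical 2 GiB of on-board memory
BYTES_PER_PIXEL = 1         # assumes 8-bit monochrome pixels

for width, height, fps in ((1280, 1024, 450), (128, 128, 10_300)):
    rate = data_rate_bytes_per_s(width, height, BYTES_PER_PIXEL, fps)
    n = frames_in_memory(MEMORY_BYTES, width, height, BYTES_PER_PIXEL)
    print(f"{width}x{height} @ {fps} fps: {rate / 1e6:.0f} MB/s, "
          f"{n} frames = {n / fps:.1f} s of recording")
```

Note that the 128 x 128 format has 80 times fewer pixels than 1280 x 1024, yet the frame rate in the example rises only about 23-fold, so the streaming data rate falls from roughly 590 MB/s to 169 MB/s rather than in proportion to the pixel count.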

Pulsed illumination can capture events on the nanosecond timescale

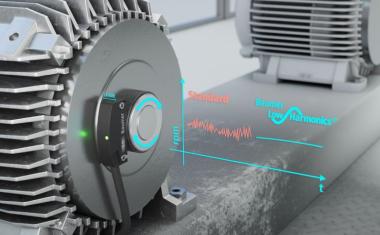

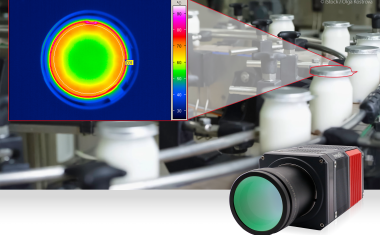

An alternative approach to capturing short-timescale events is to use very short, high-intensity pulses of light synchronized to the exposure of the image sensor within the camera. This technique can provide a simple and cost-effective route to high-speed image capture with good contrast. Critically, the time period that 'freezes' the motion is set by the pulse width of the illumination rather than by the imaging system, allowing image sensors with conventional pixel architectures to be used (Figure 2).

Although using short illumination pulses to freeze time is an attractive approach to high-speed imaging, the output frame rate of the system remains governed by the image sensor. Extremely fast events can be captured (the 83 ns required for high-velocity bullet imaging, for example, is readily achieved), but the interval between captures may be longer than with a specialist or dedicated high-speed system.

Tight control and synchronization of illumination pulses, directly from the camera system itself, can enable critical analyses to be undertaken. For example, two illumination pulses can be triggered within a single exposure cycle of the image sensor (Figure 3). As a result, two images of the scene, captured with an extremely well-defined interval between them, are optically overlaid on a single output image (Figure 4). This technique has been used, for example, to capture images of high-velocity bullets immediately after they exit a rifle barrel. A key advantage of such a 'double' pulse is that the interval between the illumination pulses can be both extremely short and extremely precise: for example, 10 ns intervals with less than 50 ps jitter are possible. This enables time-related parameters, such as particle velocity, to be measured precisely, directly from image analysis. Firing multiple pulses within a single exposure is a natural extension of the concept, with complete freedom in how the illumination pulses are sequenced.
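Extracting a velocity from a double-pulse image reduces to dividing the displacement between the two overlaid copies of the object by the pulse interval. The sketch below also combines the position-measurement error and the timing jitter in quadrature, assuming the two errors are independent. The pixel scale, measured displacement and pulse interval are hypothetical values chosen to match a 1200 m/s projectile.

```python
import math


def velocity_from_double_exposure(displacement_px: float, mm_per_px: float,
                                  interval_s: float) -> float:
    """Speed in m/s from the offset between the two overlaid images of the object."""
    return displacement_px * mm_per_px * 1e-3 / interval_s


def relative_uncertainty(displacement_px: float, sigma_px: float,
                         interval_s: float, jitter_s: float) -> float:
    """Combine position and timing errors in quadrature (assumes independent errors)."""
    return math.hypot(sigma_px / displacement_px, jitter_s / interval_s)


# Hypothetical measurement: 240 px shift at 0.05 mm/px, pulses 10 us apart, 50 ps jitter,
# object position located to within +/-0.5 px
v = velocity_from_double_exposure(240, 0.05, 10e-6)
rel = relative_uncertainty(240, 0.5, 10e-6, 50e-12)
print(f"v = {v:.0f} m/s +/- {v * rel:.1f} m/s")
```

With a 10 microsecond interval, the 50 ps jitter contributes only 5 parts per million, so the position measurement dominates the uncertainty; at a 10 ns interval the jitter term would rise to 0.5%.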

Synchronization and triggering are important features

Careful control of timing is a prerequisite for most high-speed imaging applications. Very precise synchronization of illumination pulses can be used to probe events happening on nanosecond timescales. The camera itself needs a well-designed trigger interface, generally one capable of initiating image capture in response to a hardware trigger. A critical parameter for external triggering is a well-defined and repeatable latency between the input of a trigger signal and the sequencing of the illumination pulses and image sensor exposure. Substantial jitter in this trigger latency can make measurements imprecise or, worse, cause the system to miss the event of interest altogether.
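The effect of latency jitter can be estimated by noting that the object keeps moving while the trigger propagates, so a timing spread becomes a position spread of speed × jitter. The Monte Carlo sketch below assumes Gaussian jitter, and a trigger sensor positioned so that, at the mean latency, the object is centred in a hypothetical 50 mm field of view. It estimates how often the object is still inside the field of view at the moment of capture.

```python
import random


def position_spread_m(speed_m_per_s: float, jitter_s: float) -> float:
    """One-sigma spread in object position at capture caused by trigger-latency jitter."""
    return speed_m_per_s * jitter_s


def capture_probability(speed_m_per_s: float, jitter_s: float, fov_m: float,
                        trials: int = 20_000, seed: int = 1) -> float:
    """Fraction of triggers for which the object lies inside the field of view.

    Assumes Gaussian jitter around a mean latency that has already been compensated
    by positioning the trigger sensor, so only the jitter shifts the object.
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        offset = speed_m_per_s * rng.gauss(0.0, jitter_s)  # position error from FOV centre
        if abs(offset) <= fov_m / 2:
            hits += 1
    return hits / trials


# Hypothetical 1200 m/s projectile and 50 mm field of view
for jitter in (1e-9, 10e-6, 50e-6):
    print(f"jitter {jitter * 1e6:g} us: spread {position_spread_m(1200, jitter) * 1e3:.3g} mm, "
          f"captured {capture_probability(1200, jitter, 0.05):.1%}")
```

A constant latency can be compensated simply by moving the trigger sensor; it is the jitter that cannot, which is why a repeatable latency matters more than a short one.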

A useful feature of systems that can store image frames directly on the camera is the ability to define post-event triggers. A typical example is capturing an event whose exact timing is not known in advance, such as the precise moment of failure of a component under test. In this case, the on-board memory can be configured as a 'ring' buffer, in which images are continuously captured and stored in a memory 'ring'. When the last memory space in the ring has been used, the next image frame overwrites the first space in the ring. Combined with a post-event trigger input, this ensures that the system has already captured the critical image frames leading up to and during the event of interest.
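The ring-buffer behaviour with a post-event trigger can be sketched in a few lines of Python. Here `collections.deque` with `maxlen` plays the role of the memory ring (appending to a full deque discards the oldest entry, just as the next frame overwrites the oldest slot), and `post_trigger_frames` is a hypothetical parameter for how many frames, counted from the trigger onwards, are kept before recording stops. It models the logic only, not any camera's firmware.

```python
from collections import deque


class RingBufferRecorder:
    """Keeps the most recent frames; after a trigger, records a fixed number more, then stops."""

    def __init__(self, capacity: int, post_trigger_frames: int):
        if not 0 <= post_trigger_frames <= capacity:
            raise ValueError("post_trigger_frames must be between 0 and capacity")
        # When full, appending drops the oldest frame, like overwriting the oldest memory slot
        self._frames = deque(maxlen=capacity)
        self._post_trigger = post_trigger_frames
        self._remaining = None  # None means armed and waiting for a trigger

    @property
    def done(self) -> bool:
        return self._remaining == 0

    def trigger(self) -> None:
        if self._remaining is None:  # ignore repeated triggers
            self._remaining = self._post_trigger

    def add_frame(self, frame) -> None:
        if self.done:
            return  # recording finished; memory is frozen for readout
        self._frames.append(frame)
        if self._remaining is not None:
            self._remaining -= 1

    def frames(self) -> list:
        return list(self._frames)


rec = RingBufferRecorder(capacity=8, post_trigger_frames=3)
for i in range(20):
    if i == 12:  # e.g. a failure sensor fires during frame 12
        rec.trigger()
    rec.add_frame(i)
print(rec.frames())  # [7, 8, 9, 10, 11, 12, 13, 14]
```

With a capacity of 8 and 3 post-trigger frames, a trigger at frame 12 leaves frames 7 to 11 from before the event and 12 to 14 from the trigger onwards in memory; the post-trigger count sets how the buffer is split between 'before' and 'after'.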

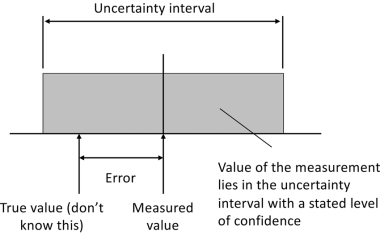

Linear pixel response enables quantitative analysis

High-speed imaging applications are often found in test and measurement environments. These applications typically relate to specific events or processes of particular interest, in contrast to the repetitive, quality-based inspection tasks often found in manufacturing vision applications. For cameras deployed in test applications, it is advantageous for each pixel to have an identical and linear response to light. In general, the raw response of a pixel to light is non-linear, often showing decreasing sensitivity with increasing signal level, and each pixel can have a different non-linearity. Many industrial cameras correct the basic offsets between pixels, but stop short of providing an identical linear response across the whole array. A uniform linear response brings two major advantages: firstly, quantitative intensity analysis becomes possible; secondly, an identical response assists the post-processing of images, particularly where high levels of digital gain are used to enhance images and bring out fine details.
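One common way to obtain an identical, linear response in software is per-pixel linearization: record calibration frames at a series of known light levels, then invert each pixel's own response curve. The sketch below assumes NumPy and a monotonically increasing response for every pixel, and uses a simulated 2 x 2 sensor with hypothetical gain, offset and non-linearity. It illustrates the principle rather than how any particular camera implements its correction.

```python
import numpy as np


def build_linearizer(light_levels: np.ndarray, calib_stack: np.ndarray):
    """Return a function mapping raw frames to a common linear light scale.

    light_levels: shape (K,), known relative illumination of each calibration frame.
    calib_stack:  shape (K, H, W), raw frames recorded at those levels.
    Assumes each pixel's raw response increases monotonically with light;
    raw values outside the calibrated range are clamped by np.interp.
    """
    k, h, w = calib_stack.shape
    curves = calib_stack.reshape(k, h * w).astype(float)

    def linearize(raw: np.ndarray) -> np.ndarray:
        flat = raw.reshape(h * w).astype(float)
        out = np.empty_like(flat)
        for p in range(h * w):
            # Invert this pixel's own response curve by piecewise-linear interpolation
            out[p] = np.interp(flat[p], curves[:, p], light_levels)
        return out.reshape(h, w)

    return linearize


# Hypothetical 2x2 sensor: per-pixel gain and offset plus a compressive non-linearity
rng = np.random.default_rng(0)
gain = rng.uniform(900, 1100, size=(2, 2))
offset = rng.uniform(10, 30, size=(2, 2))
levels = np.linspace(0.0, 1.0, 101)


def sensor(light):
    return gain * (light - 0.3 * light**2) + offset


calib = np.stack([sensor(lvl) for lvl in levels])
linearize = build_linearizer(levels, calib)
print(linearize(sensor(0.37)))  # every pixel reads approximately 0.37
```

Correcting only offsets would remove the `offset` term but leave both the per-pixel gain spread and the curvature; inverting each pixel's full curve removes all three, which is what makes quantitative intensity comparisons across the array meaningful.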

Odos Imaging have recently released the StarStop Freeze motion camera kit to provide these benefits, combining dedicated components that deliver ultra-short strobe illumination with a high-speed burst recording camera. The core functionality includes highly configurable trigger IO and breakout, as well as software to easily configure the system and capture high-contrast images. The kit is designed to directly address the needs of professionals who require fast, flexible analysis without the inconvenience and cost of integrating multiple hardware components.