Enabling Terahertz Light-field Imaging Using Fully Integrated Silicon Components

Development of a Modular THz LF Camera

Light-field (LF) imaging is a highly versatile computational technique for 3D scene reconstruction, depth estimation and content editing. Combining LF imaging with terahertz detectors to perform real-time see-through imaging could open up novel applications in security, medicine and industry.

Why perform terahertz LF imaging? The terahertz range, defined here as 300 GHz to 3 THz (wavelengths of 1 mm down to 0.1 mm), offers unique advantages for imaging and sensing. Compared with lower-frequency microwaves or millimeter waves (mm-waves), terahertz waves offer a finer, sub-mm image resolution, comparable to that of the human eye and good enough to resolve the shapes of most everyday objects. Terahertz waves are strongly absorbed by water molecules and reflected by metals, and they pass through dielectric materials such as everyday plastics, much as X-rays do. X-ray photons, however, carry enough energy to ionize biomolecules such as human genetic material, so X-ray doses must be limited and shielded to prevent unintended exposure. THz waves have no such side effects and can be used without these restrictions. As a result, THz imaging shows strong potential in security screening, package inspection, quality control, biomedical diagnosis, and art conservation. Until recently, attention has focused on conventional 2D imaging because of the limitations of THz hardware components. State-of-the-art THz transmitters and receivers are either weak in performance or lack the level of integration needed for sophisticated computational imaging techniques such as light-field imaging.
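The band edges and the non-ionizing argument above can be checked with a few lines of arithmetic. The following is a minimal sketch; the function names are illustrative and not taken from the original work. It converts the band-edge frequencies to free-space wavelengths and photon energies, which come out in the meV range, whereas X-ray photons carry energies on the order of keV.

```python
# Physical constants in SI units (exact by definition).
C = 299_792_458.0        # speed of light, m/s
H = 6.62607015e-34       # Planck constant, J*s
EV = 1.602176634e-19     # joules per electronvolt


def wavelength_mm(freq_hz: float) -> float:
    """Free-space wavelength in millimetres for a given frequency."""
    return C / freq_hz * 1e3


def photon_energy_ev(freq_hz: float) -> float:
    """Photon energy in electronvolts for a given frequency."""
    return H * freq_hz / EV


for label, f in [("300 GHz", 300e9), ("3 THz", 3e12)]:
    lam = wavelength_mm(f)
    e_mev = photon_energy_ev(f) * 1e3
    print(f"{label}: wavelength {lam:.3f} mm, photon energy {e_mev:.2f} meV")
```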

Light-field (LF) imaging is a robust computational technique based on ray-tracing geometry for 3D scene reconstruction, depth estimation and content editing. Here, the light flowing into and out of the object is treated as a vector field composed of ray bundles, which are quantified in terms of the energy density along a specified direction and position in 3D space. If the position and flow direction of individual light rays are known, either back-propagation (from the sensor) or forward propagation (from the source) can be applied to reconstruct the 3D volume that is the source of the field disturbance. The light-field method works with incoherent radiation, but it requires spatio-directional sources and detectors, i.e. components that can synthesize and sample the light along multiple angles across different spatial locations. Isotropic, incoherent sources are excellent at generating spatio-directional light-fields that can be readily sampled by a camera. Light-field techniques have become popular for visible-light imaging because such sources and inexpensive cameras are ubiquitous.

How Light-Field Imaging Works

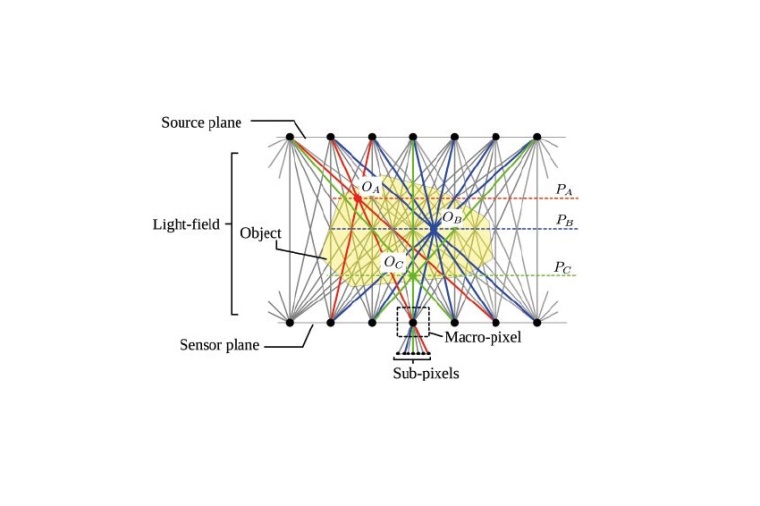

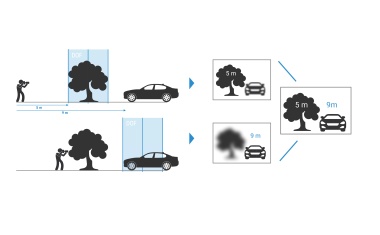

The fundamental idea behind light-field imaging is to describe the space between two planes as a vector field. Based on the direction of energy flow, one plane is classified as the source plane and the other as the sensor plane. Such a representation may cover only one subspace of a full light-field system, which might also contain other components such as lenses and mirrors. It is assumed that the sources and sensors are isotropic, i.e. they radiate and capture energy, respectively, along all directions. Assuming that there are no wave effects (diffraction and interference), the straight lines joining the sources and the sensors form the light rays. An object placed between the two planes partially transmits and partially reflects the light, thus perturbing the light-field. If all the spatio-directional light rays can be sampled at the sensor plane, ray-tracing and integration of the light intensity along different sets of rays in post-processing allow focusing on different parts of the object (including along its depth axis). The concept can be extended to multiple sets of rays to acquire 2D images of different planes in the scene. These images form cross-sections along the object depth and lead to a 3D reconstruction.
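The refocusing step described above can be illustrated with a toy shift-and-add example. This is a minimal sketch under strong simplifying assumptions, not the reconstruction used in the original work: it uses one spatial and one angular dimension, a single point emitter, integer ray slopes, and no wave effects. All names and sizes are hypothetical.

```python
import numpy as np


def synth_light_field(n_x, slopes, x0, depth):
    """Light field lf[x, j] of one point emitter at lateral position x0,
    located `depth` grid units in front of the sensor plane."""
    lf = np.zeros((n_x, len(slopes)))
    for j, u in enumerate(slopes):
        x = int(round(x0 + u * depth))  # where the ray with slope u lands
        if 0 <= x < n_x:
            lf[x, j] = 1.0
    return lf


def refocus(lf, slopes, depth):
    """Back-project every sampled ray to `depth` and sum the intensities
    that arrive at the same lateral position (shift-and-add)."""
    n_x = lf.shape[0]
    image = np.zeros(n_x)
    for j, u in enumerate(slopes):
        shift = int(round(u * depth))
        for x in range(n_x):
            p = x - shift               # lateral position of the ray at `depth`
            if 0 <= p < n_x:
                image[p] += lf[x, j]
    return image


slopes = np.arange(-2, 3)               # five sampled ray directions
lf = synth_light_field(n_x=33, slopes=slopes, x0=16, depth=3)

# Sweep candidate depths: the rays only pile up at the true depth.
peaks = {d: refocus(lf, slopes, d).max() for d in range(1, 6)}
best_depth = max(peaks, key=peaks.get)
print(peaks, best_depth)
```

At the correct depth all five rays converge on the same position, so the refocused image has a single sharp peak. At any other depth they spread out, which is what makes a depth sweep usable as a simple form of 3D reconstruction.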

How do we sample the light-field? If each point on the sensor plane is a single pixel, all the light rays captured within this pixel are integrated in intensity, and the spatio-directional information is irrevocably lost. Instead, multiple sub-pixels are required at each sensor position (macro-pixel) to sample the incident light along different directions. This macro-pixel is the key building block for any light-field imaging system. The object sampling density corresponds to the discretization of the light-field, which is associated with the spatial and angular arrangement of the sub-pixels and the macro-pixels.
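To make the loss of directional information concrete, the following minimal sketch compares two hypothetical light fields that differ only in the arrival direction of a single ray. The array sizes are arbitrary and reduced to one spatial dimension for brevity. A sensor with one pixel per position integrates over direction and records the same image for both, while a macro-pixel with sub-pixels tells them apart.

```python
import numpy as np

N_MACRO, N_SUB = 4, 4   # hypothetical: 4 macro-pixels, 4 sub-pixels each

# lf[m, s]: intensity at macro-pixel m arriving from direction bin s.
lf_a = np.zeros((N_MACRO, N_SUB))
lf_a[2, 0] = 1.0        # a ray hits macro-pixel 2 from direction bin 0

lf_b = np.zeros((N_MACRO, N_SUB))
lf_b[2, 3] = 1.0        # same position, different direction bin


def single_pixel_readout(lf):
    """A conventional pixel sums all directions at each position."""
    return lf.sum(axis=1)


same_image = np.array_equal(single_pixel_readout(lf_a),
                            single_pixel_readout(lf_b))
same_light_field = np.array_equal(lf_a, lf_b)
print(same_image, same_light_field)  # True False
```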

Light-Field Imaging at Visible vs Terahertz Wavelengths

The first visible-light demonstrations were based on scanning a scene by moving a single camera, for applications such as modelling of a 3D illumination source, virtual imaging illustration, digital archiving of Renaissance sculptures, and interactive city panoramas. Compact light-field camera designs have since been demonstrated, consisting of a 2D array of micro-lenslets arranged over a digital camera sensor. This technology is now commercialized for 3D imaging and computational aperture synthesis in photography and 3D microscopy. Some of these advancements have led to the multi-camera setups widely available in modern smartphones.

There are many similarities between visible-light and incoherent THz light-field systems. Both require spatio-directional sensors and diffuse illumination. In both cases the plenoptic function is real-valued, and the intensity falls off as 1/r² with distance r from a point source, as expected for a spherical wavefront in the far field. However, there are also significant differences between THz and visible light, which present unique challenges for THz light-field systems.

First, the wavelength at THz is nearly three orders of magnitude larger than that of visible light. This severely limits how densely the sensing pixels can be packed, because the diffraction limit scales with wavelength. Second, high-intensity isotropic incoherent THz sources do not exist so far, and computational light-field imaging is not possible without appropriate illumination. The same is true for THz sensors. Another particularly important challenge at THz is the low integration density: only a few hundred THz sensors can be integrated within a focal-plane array (FPA), compared with millions of pixels in visible-light sensors. This directly limits the light-field density and the achievable image resolution. Finally, the lens arrays required to capture the spatio-directional light-field in compact cameras are not available at THz frequencies.

Capturing Spatio-Directional Light-Field Diversity in a Multi-Chip Terahertz Camera SoC?

The prerequisite for LF imaging is a large focal-plane array (FPA) of detectors capable of resolving spatio-directionally diverse light-fields. Could a fully integrated THz digital camera, comprising a 2D array of antenna-coupled CMOS direct detectors coupled to a high-resistivity hyper-hemispherical silicon lens, be used to capture spatio-directional light-fields?

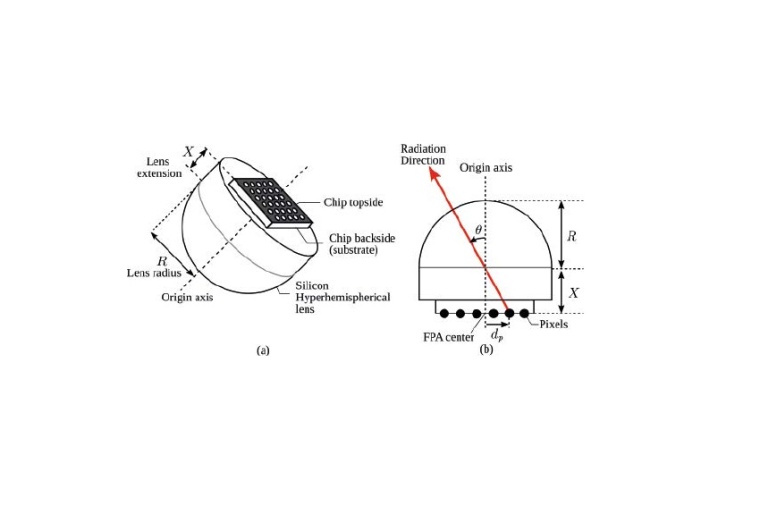

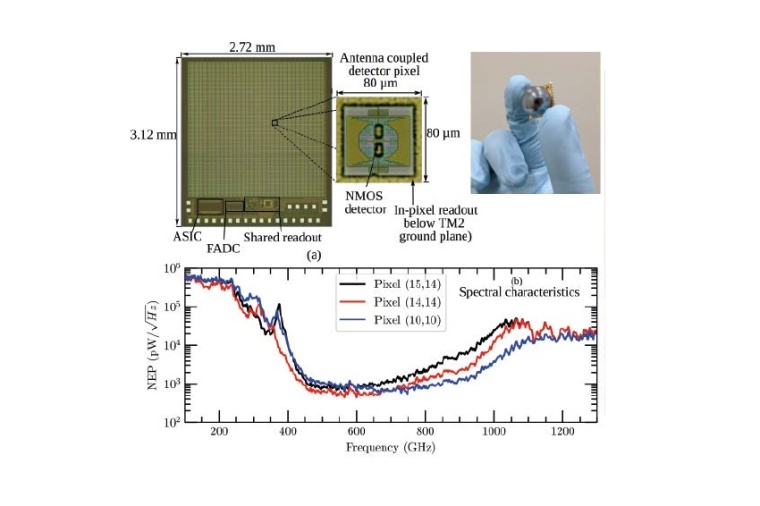

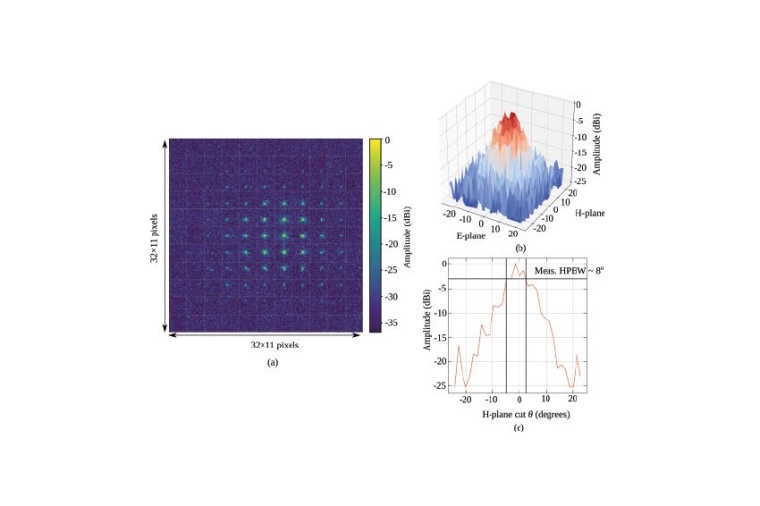

In the silicon lens-integrated THz FPA configuration, the antennas radiate from the backside of the chip (toward the substrate). The extension length of the lens is selected such that the pixel array is located at the focal point of the approximate ellipse formed by the hyper-hemispherical lens. This allows for pixel-to-angle mapping: the spatial location of a pixel on the array corresponds directly to a receive beam angle. The mapping is deterministic, and it can be predicted to first order with a pinhole image-formation model. In effect, the silicon lens together with its detector array plays the role of a macropixel, and the individual detector pixels play the role of subpixels providing directional diversity. A fully integrated THz light-field macropixel consists of 32 x 32 antenna-coupled CMOS THz power detectors. These detectors exhibit broadband spectral characteristics, with the best operating frequency at 600 GHz and a 3 dB bandwidth of nearly 300 GHz. The detectors are activated in a rolling-shutter mode through on-chip row and column selection logic, and the programmable gate arrays, the correlated double sampler (CDS) for offset cancellation and the ADC are globally shared across all detectors.
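The first-order pinhole mapping from pixel index to receive beam angle can be sketched as follows. Only the 32 x 32 array size comes from the text. The pixel pitch, the effective focal length and the sign convention (an inverted image, as in a pinhole camera) are illustrative assumptions and not values from the original design.

```python
import numpy as np

N_PIXELS = 32        # pixels per side (from the text)
PITCH_MM = 0.08      # hypothetical pixel pitch
F_EFF_MM = 2.0       # hypothetical effective focal length of the pinhole model


def pixel_to_angles(row, col, n=N_PIXELS, pitch=PITCH_MM, f_eff=F_EFF_MM):
    """Return the beam angles (theta_x, theta_y) in degrees for a pixel.

    The array centre looks along the optical axis. A pixel displaced by
    +x looks toward -x (assumed pinhole-style image inversion).
    """
    centre = (n - 1) / 2.0
    x = (col - centre) * pitch
    y = (row - centre) * pitch
    theta_x = np.degrees(np.arctan2(-x, f_eff))
    theta_y = np.degrees(np.arctan2(-y, f_eff))
    return theta_x, theta_y


# Corner pixels give the extremes of the field of view for these parameters.
print(pixel_to_angles(0, 0))
print(pixel_to_angles(N_PIXELS - 1, N_PIXELS - 1))
```

With a mapping of this kind, each detector readout can be tagged with a direction, which is the information a ray-based reconstruction needs from every macropixel.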

A full-fledged THz light-field camera SoC is realized by implementing large arrays of such macropixels and routing the control logic on a common motherboard. This multi-chip scaling approach is a convenient way of keeping fabrication costs in check while retaining the advanced functionality of fully integrated THz digital cameras. The modular design allows the THz light-field imaging system to be scaled flexibly, and individual CMOS THz digital cameras can be replaced conveniently.

An Example of 3D Reconstruction

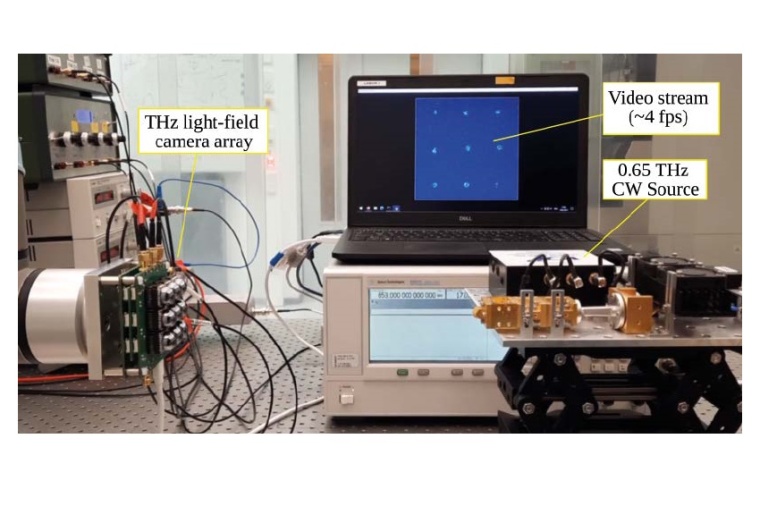

The THz light-field camera was used for a real-time imaging demonstration. The camera was placed in front of a 650 GHz CW AMC source, and the light-field image data was streamed out as video at an overall frame rate of 4 fps, with each sub-camera streaming at 36 fps. The imaging speed was limited by the control implementation: individual commands were sent in sequence from a Python interface to trigger the data acquisition from each camera. Purely for demonstration purposes, the 3x3 array of THz light-field cameras was stepped in 2D to capture the light-field of a diverging source. The collected spatio-directional data was then computationally processed to reconstruct the radiation pattern of the AMC source.
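The reported frame rates are consistent with a simple sequential-readout estimate. The sketch below assumes that the nine sub-cameras of the 3x3 array are triggered strictly one after another and that command overhead is negligible. Both are assumptions, and the function name is illustrative.

```python
def sequential_frame_rate(per_camera_fps: float, n_cameras: int) -> float:
    """Overall frame rate when the cameras are read out one after another."""
    time_per_full_frame = n_cameras / per_camera_fps  # seconds per light-field frame
    return 1.0 / time_per_full_frame


N_CAMERAS = 3 * 3         # 3x3 array of sub-cameras (from the text)
PER_CAMERA_FPS = 36.0     # per-camera streaming rate (from the text)

overall_fps = sequential_frame_rate(PER_CAMERA_FPS, N_CAMERAS)
print(overall_fps)  # 4.0
```

Under the same assumptions, triggering all sub-cameras in parallel would lift the overall rate toward the per-camera rate.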

This work received partial funding from the European Research Council (ERC) under the European Union's Horizon 2020 research and innovation programme (grant agreement No 101019972).


Company

Bergische Universität Wuppertal

Gaußstraße 20

42119 Wuppertal

Germany

most read

Hyperspectral Imaging for Surface and Layer Analysis

Optical Wafer Inspection

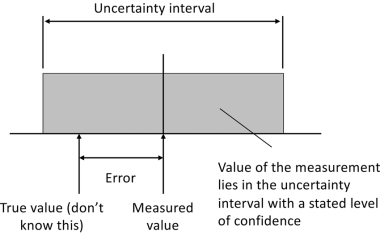

Measurement uncertainty in manufacturing: Understanding the basics

Why precise measurement results alone are not enough

Optical Metrology Technology: Focus-Variation and its Advanced Extensions

Basics of Measurement Technology

Sensor Fusion in Outdoor Applications

AI-Driven Collision Warning System for Mobile Machinery

Targeted Grasping of the Right Object

2D Images Combined with Deep Learning Enable Robust Detection Rates