Best Starting Position for Visual Intelligence

The Current Status of Embedded Vision

The term “embedded vision” is on everyone’s lips. The concept behind it is not new, but the hardware developments of recent years open up new possibilities. An overview.

There are many embedded solutions and products on the market. Across all suppliers, the advertised advantages come down to a common denominator: embedded vision products are small, modular, platform-independent and powerful, yet have low power consumption. In short, these are exactly the features desired for the vast majority of machine vision applications.

Of course, the question arises why this range of products did not exist earlier. The simple answer is that these types of products have been around for a long time, just not with this level of performance. The goal of embedded vision systems has always been to bring the complete machine vision system, including hardware and software, into the customer’s housing. In other words, the camera is located relatively close to the computing platform, and the application-specific hardware is embedded in or connected to the system. Additional components such as lighting, sensors or digital I/Os are integrated or provided.

Vision-in-a-Box

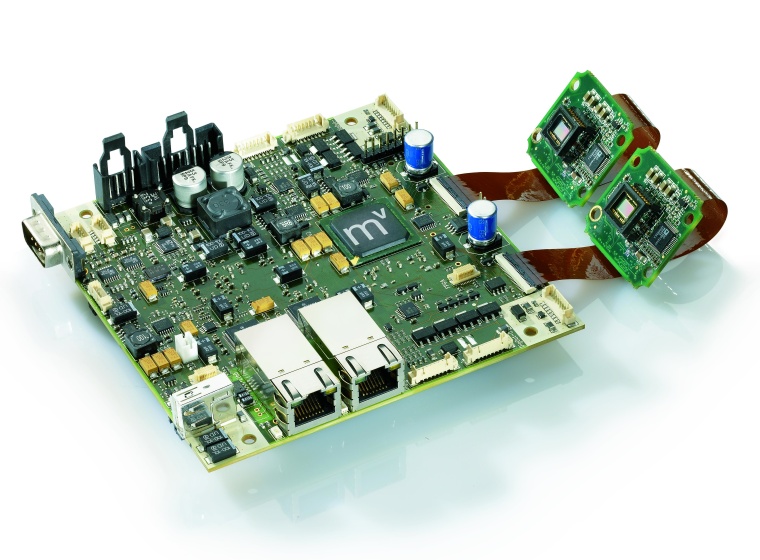

This vision-in-a-box approach naturally suits many applications. One example from Matrix Vision is a license plate recognition system for a British traffic monitoring company. The system has two remote sensor heads with different image sensors, one for daytime and one for nighttime use. The LED illumination is housed on a separate circuit board. Everything is connected to a PowerPC-based circuit board with an FPGA. An image is triggered via the integrated digital I/Os, the FPGA extracts the license plate from the full image and sends this area of interest (AOI) over the network to a cloud server, which uses OCR to determine the characters. Anyone who objects that PowerPC is yesterday’s news is right: the example is already more than 13 years old and is based on a customized smart camera. As the example shows, smart cameras, or intelligent cameras in the broader sense, are vision-in-a-box systems, which proves that embedded vision has been around for a long time. Although their limited performance restricted the range of possible applications, these intelligent cameras still do their work reliably today.

Edge Computing: Decentralized Data Processing

License plate recognition is also an example of edge computing, the approach of decentralizing data processing: the captured data is preprocessed at the edge of the network, and further aggregation then takes place on the (cloud) server. In the Internet of Things (IoT), embedded vision provides the machine vision solution for edge computing. This makes it clear that even with Industry 4.0, the requirement profile of embedded vision has not changed much.
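The data reduction that edge preprocessing achieves can be illustrated with a minimal sketch. It assumes an 8-bit monochrome frame stored row by row; the frame size, the AOI coordinates and the helper name `crop_aoi` are hypothetical and not taken from the Matrix Vision system, where this step runs on the FPGA.

```python
def crop_aoi(frame: bytes, width: int, x: int, y: int, w: int, h: int) -> bytes:
    """Cut a w x h area of interest out of an 8-bit mono frame stored row by row."""
    rows = (
        frame[(y + r) * width + x : (y + r) * width + x + w]
        for r in range(h)
    )
    return b"".join(rows)


# Hypothetical full frame: 1920 x 1080 pixels, one byte per pixel (all zeros here).
WIDTH, HEIGHT = 1920, 1080
frame = bytes(WIDTH * HEIGHT)

# Hypothetical license plate position found by the edge device.
aoi = crop_aoi(frame, WIDTH, x=800, y=600, w=320, h=80)

# Only the AOI has to travel over the network to the server.
print(f"full frame: {len(frame)} bytes, AOI: {len(aoi)} bytes")
```

With these assumed numbers, only the cropped region leaves the device, which is a small fraction of the full frame.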

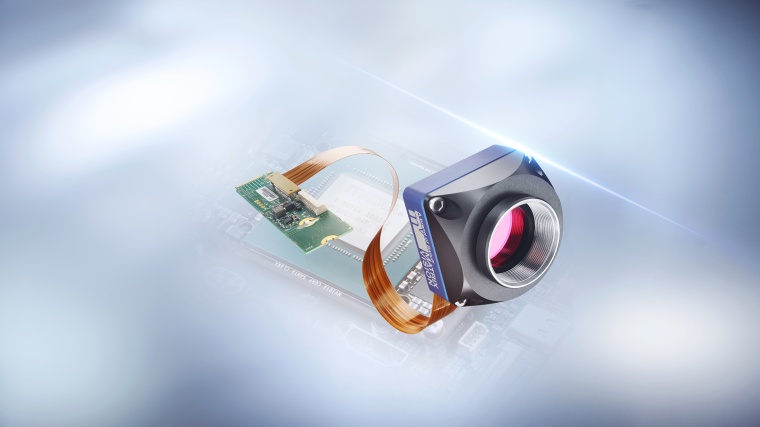

What has changed, on the other hand, is the performance requirements of the potential applications due to higher resolutions and frame rates, which in turn are driven by the new image sensor generations. Here, machine vision, and embedded vision in particular, is benefiting as a technological “free rider” from the rapid developments in the smartphone market. It is thanks to this market that the importance of ARM processor architectures is steadily growing. Corresponding systems-on-a-chip (SoCs) from Nvidia, Broadcom (as the basis of the Raspberry Pi) or NXP, for example, as well as systems-on-module (SoMs) such as Smarc or COM Express, now play the main role as powerful computing units with low power consumption. Carrier boards make these modules operational, so that they can serve as the “mainboard” for vision-in-a-box.

In cooperation with the customer, carrier boards can also be customized and thus integrated more deeply into the customer’s application, which is another way of interpreting “embedded”. This gives the customer a chance to stand out from the competition.

Getting the Big Image Data to the Computing Unit

Back to the higher resolutions and frame rates, which bring yet another problem to light: how do you get this amount of data from the image sensor to the computing unit? A fourth-generation 12.4-megapixel Pregius image sensor from Sony with a 10-bit ADC, read out at 175 frames per second with 8 bits per pixel, produces about 2,170 MB/s of data. This is where many interfaces give up.
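The figure of 2,170 MB/s can be reproduced with a short calculation. The sketch below assumes 1 MB = 10^6 bytes and compares the result with the nominal line rates of a few common interfaces; those line rates are upper bounds before encoding and protocol overhead, and the function and dictionary names are illustrative only.

```python
def sensor_data_rate_mb_s(megapixels: float, fps: float, bits_per_pixel: int) -> float:
    """Raw image data rate in MB/s (1 MB = 10**6 bytes)."""
    bytes_per_frame = megapixels * 1e6 * bits_per_pixel / 8
    return bytes_per_frame * fps / 1e6


# Values from the text: 12.4 megapixels, 175 frames per second, 8 bits per pixel.
rate_8bit = sensor_data_rate_mb_s(12.4, 175, 8)
print(f"sensor output: {rate_8bit:.0f} MB/s")

# Nominal line rates in Gbit/s (upper bounds; usable throughput is lower).
NOMINAL_GBIT_S = {
    "Gigabit Ethernet": 1.0,
    "USB 3.x (5 Gbit/s)": 5.0,
    "10 Gigabit Ethernet": 10.0,
}

for name, gbit_s in NOMINAL_GBIT_S.items():
    limit_mb_s = gbit_s * 1000 / 8  # Gbit/s -> MB/s
    verdict = "sufficient" if limit_mb_s >= rate_8bit else "too slow"
    print(f"{name}: at most {limit_mb_s:.0f} MB/s -> {verdict}")
```

Even at their nominal line rates, none of the three listed interfaces reaches the sensor's output rate.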

Matrix Vision has therefore decided to use PCI Express (PCIe). It is very well standardized across all platforms and, above all, is scalable. Depending on the number of lanes and the design, net bandwidths of 3,200 MB/s are possible, with image data written directly to memory with almost no latency. That leaves headroom even for the 2,170 MB/s of the example above, so PCIe can meet practically any application requirement.
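How PCIe scales with generation and lane count can be estimated as follows. This is a rough sketch: it only accounts for the per-lane signalling rate and line encoding of PCIe generations 1 to 3, not for packet and protocol overhead, so real net bandwidths are lower. The article does not state which generation or lane count the cameras use; the configurations below are examples.

```python
# Per-lane signalling rate (GT/s) and line-encoding efficiency per PCIe generation.
PCIE_GENERATIONS = {
    1: (2.5, 8 / 10),      # 8b/10b encoding
    2: (5.0, 8 / 10),      # 8b/10b encoding
    3: (8.0, 128 / 130),   # 128b/130b encoding
}


def pcie_raw_bandwidth_mb_s(generation: int, lanes: int) -> float:
    """Link bandwidth in MB/s after line encoding, before protocol overhead."""
    gt_per_s, encoding = PCIE_GENERATIONS[generation]
    per_lane_mb_s = gt_per_s * 1e9 * encoding / 8 / 1e6
    return per_lane_mb_s * lanes


REQUIRED_MB_S = 2170  # sensor data rate from the example in the text

for generation, lanes in [(2, 1), (2, 4), (2, 8), (3, 4)]:
    bandwidth = pcie_raw_bandwidth_mb_s(generation, lanes)
    verdict = "enough" if bandwidth >= REQUIRED_MB_S else "not enough"
    print(f"Gen{generation} x{lanes}: {bandwidth:.0f} MB/s -> {verdict}")
```

Adding lanes or moving to a newer generation raises the available bandwidth in predictable steps, which is the scalability the text refers to.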

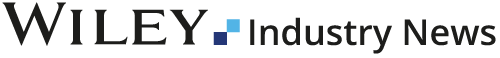

With the launch of its PCIe-based embedded vision product series, Matrix Vision has created a modular system centered around the high-end embedded camera series MV Blue Naos. The camera can be flexibly assembled from different components such as lens, filter, lens holder, sensor and housing to suit the application. Various adapters for PCIe and M.2 are available for connection to a carrier board via the all-in-one interface “Naos for Embedded” (N4e).

The cameras have low power consumption and support ARM SoCs and Intel SoMs such as Smarc and COM Express while placing little load on the CPU and GPU. They enable multi-camera systems and support the AI units of the SoCs. In the image acquisition programming interface “MV Impact Acquire SDK”, PCIe is implemented as a GenICam GenTL producer and consumer. This enables customers to migrate existing solutions with little effort.

Mipi is also often mentioned in connection with embedded vision. Mipi is a kind of standard in ARM mobile processors, but it requires a hardware driver for a specific SoC and a specific Mipi sensor, and thus a different driver for each combination of SoC and image sensor. The image sensors used with Mipi tend to be low-end and are closer to USB3 cameras in terms of frame rate. Provided the performance is sufficient, Mipi can be an attractively priced solution.

The last piece of the puzzle still missing for embedded vision is a standard comparable to GigE Vision and USB3 Vision. The European Machine Vision Association (EMVA) set the course for this with its standardization initiative at the end of 2019. The starting position for embedded vision could not be better.

Author

Ulli Lansche, Technical writer at Matrix Vision

Company

Balluff MV GmbH

Talstr. 16

71750 Oppenweiler

Germany

most read

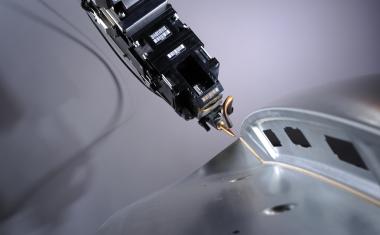

Automatic Defect Detection in Laser Welding and Brazing with AI

Process Monitoring in Automotive Production.

There’s a Large Hippo Resting in the Mud

Virtual Video Safaris for Blind and Visually Impaired People

Systematic Characterization of Ring-Shaped Laser Beams

New metrics for meaningful analysis beyond ISO 11146.

Spectronet Collaboration Conference 2025

International Conference for Photonics and Machine Vision

The Rise of Photonic and Neuromorphic Computing: A New Era for AI Hardware

Computer Architectures for future data processing