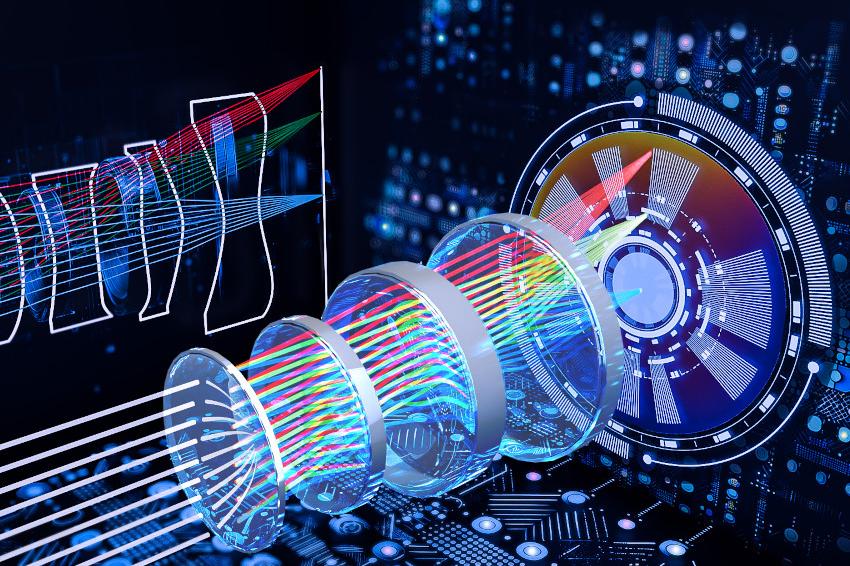

Deep learning improves lens design

28.08.2024 - An automated scheme for optical lens design looks set to enhance mobile phone cameras.

An automated computational approach to designing the lenses of imaging systems promises to deliver optimal solutions without human intervention, slashing the time and cost usually required. The result could be mobile phone cameras with better image quality or new functionality. Developed by Xinge Yang, Qiang Fu and Wolfgang Heidrich at KAUST, the DeepLens design method is based on the concept of “curriculum learning”: a structured, iterative, staged approach that takes into account the imaging system’s key parameters, such as its resolution, aperture and field of view.

Artificial intelligence systems, like humans, have trouble learning complex tasks from scratch without guidance. Humans, for example, learn to crawl, stand and then walk before they can ultimately learn to jump, dance or play sports. Similarly, curriculum learning breaks down a complex task (in this case the design of a complex lens system) into individual milestones of increasing difficulty, step by step raising the demands on resolution, aperture size and field of view. Importantly, the scheme does not need a human-made design as a starting point. Instead, it can create its own design of a compound optical system from scratch, featuring several refractive lens elements, each with its own customized shape and properties, to provide the best overall performance.
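The staged idea can be illustrated with a minimal Python sketch. It is not the DeepLens implementation: the `StageTarget` fields, the `curriculum` function and the linear interpolation between an easy starting specification and the final one are all assumptions made for illustration. In an actual design loop, the lens would be re-optimized against each stage's targets before moving to the next, harder stage.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StageTarget:
    """Hypothetical design targets for one curriculum stage."""
    f_number: float      # smaller f-number = larger aperture = harder
    fov_deg: float       # larger field of view = harder
    resolution_px: int   # higher resolution = harder


def curriculum(start: StageTarget, final: StageTarget, n_stages: int) -> list[StageTarget]:
    """Interpolate linearly from an easy spec to the final spec (an assumed schedule)."""
    if n_stages < 2:
        raise ValueError("need at least two stages (start and final)")
    stages = []
    for i in range(n_stages):
        t = i / (n_stages - 1)  # 0.0 at the first stage, 1.0 at the last
        stages.append(StageTarget(
            f_number=start.f_number + t * (final.f_number - start.f_number),
            fov_deg=start.fov_deg + t * (final.fov_deg - start.fov_deg),
            resolution_px=round(start.resolution_px
                                + t * (final.resolution_px - start.resolution_px)),
        ))
    return stages


if __name__ == "__main__":
    easy = StageTarget(f_number=8.0, fov_deg=20.0, resolution_px=256)
    goal = StageTarget(f_number=2.0, fov_deg=80.0, resolution_px=1024)
    for k, stage in enumerate(curriculum(easy, goal, n_stages=3), start=1):
        # A real pipeline would optimize the lens against `stage` here.
        print(f"stage {k}: {stage}")
```

The values used above (f/8 to f/2, 20° to 80°, 256 to 1024 pixels) are placeholders rather than figures from the KAUST work.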

“Traditional automated methods only achieve minor optimizations of existing designs,” commented Yang. “Our approach can optimize complex lens designs from the beginning, drastically reducing the months of manual work by an experienced engineer to just a single day of computation.” The approach has already proved highly effective in creating both classical optical designs and an extended depth-of-field computational lens in a mobile-phone-sized form factor with a large field of view, using lens elements with highly aspheric surfaces and a short back focal length. It has also been tested on a six-element classical imaging system, with the evolution of the design and its optical performance analyzed as the method adapts the lens to meet the specifications.

“Our method specifically addresses the design of multielement refractive lenses, common in devices from microscopes to cellular cameras and telescopes,” explained Yang. “We anticipate strong interest from companies involved with mobile device cameras, where hardware constraints necessitate computational assistance for optimal image quality. Our method excels in managing complex interactions between optical and computational components.”

At present, the DeepLens approach applies only to refractive lens elements, but the team says it is now working to extend the scheme to hybrid optical systems that combine refractive lenses with diffractive optics and metalenses. “This will further miniaturize imaging systems and unlock new capabilities such as spectral cameras and joint-color depth imaging,” concluded Yang. (Source: KAUST)